Extracting GZip & Tar Files Natively in .NET Without External Libraries

🎯 TL;DR: Native .tar.gz Extraction in .NET 7 Without External Dependencies

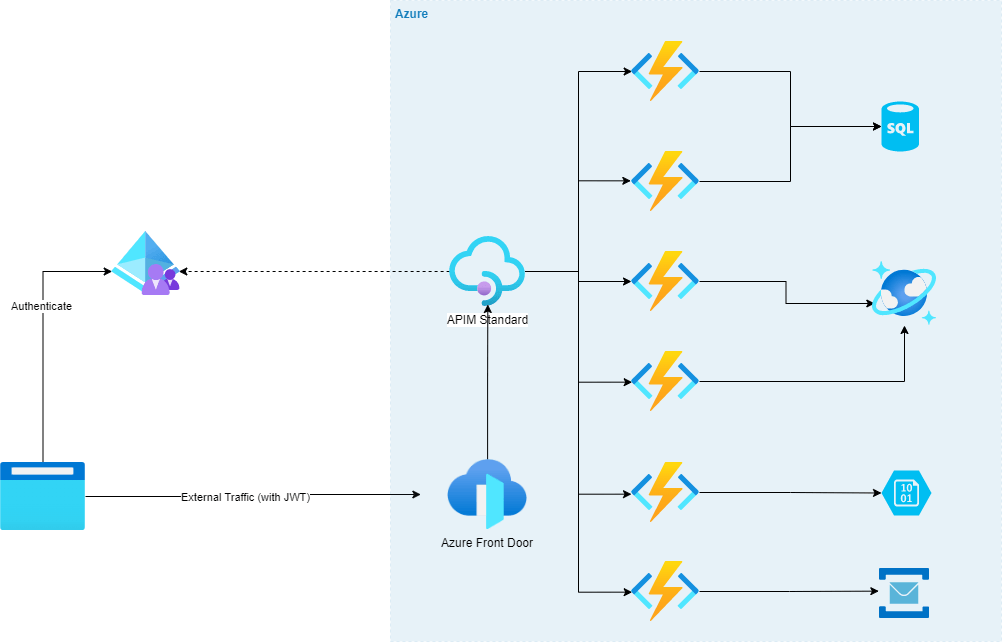

Processing compressed .tar.gz files in Azure Functions traditionally required external libraries like SharpZipLib. Problem: External dependencies increase complexity and security surface area. Solution: .NET 7 introduces the native System.Formats.Tar namespace alongside the existing System.IO.Compression for GZip, enabling complete .tar.gz extraction without external dependencies. The implementation uses GZipStream for decompression and TarReader for archive extraction, with proper entry type filtering and async operations.

Introduction

Imagine a scenario where a .tar.gz file lands in your Azure Blob Storage container. When uncompressed, this file yields a collection of individual files. The arrival of the file triggers an Azure Function, which springs into action, decompressing the contents and transferring them into a different container.

In this context, a team may instinctively reach for a robust library like SharpZipLib. But what if there is a mandate to accomplish this without external dependencies? With .NET 7, that becomes possible.

In .NET 7, native support for Tar files has been introduced, and GZip is catered to via System.IO.Compression. This means we can decompress a .tar.gz file natively in .NET 7, bypassing any need for external libraries.
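To make the GZip half of that concrete, here is a minimal sketch of a round trip through GZipStream from System.IO.Compression. The class and method names (GZipSketch, Compress, Decompress) are made up for illustration and are not part of the framework. The sketch works on in-memory byte arrays rather than blobs.

```csharp
using System.IO;
using System.IO.Compression;

// Hypothetical helper for illustration only; not a framework type.
public static class GZipSketch
{
    // Wraps a MemoryStream in a GZipStream and writes the raw bytes through it.
    public static byte[] Compress(byte[] data)
    {
        using var output = new MemoryStream();
        // leaveOpen: true keeps 'output' open after the GZipStream is disposed.
        using (var gzip = new GZipStream(output, CompressionMode.Compress, leaveOpen: true))
        {
            gzip.Write(data, 0, data.Length);
        } // Disposing the GZipStream flushes the remaining compressed data.

        return output.ToArray();
    }

    // Reads GZip-compressed bytes back into their original form.
    public static byte[] Decompress(byte[] gzipped)
    {
        using var input = new MemoryStream(gzipped);
        using var gzip = new GZipStream(input, CompressionMode.Decompress);
        using var output = new MemoryStream();
        gzip.CopyTo(output);
        return output.ToArray();
    }
}
```

For a .tar.gz file, the decompressed bytes are a plain TAR archive, so a decompressing GZipStream can be handed directly to a TAR reader instead of being copied into memory first.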

This post will walk you through this process, providing a practical example using .NET 7 to show how this can be achieved.

.NET 7: Native TAR Support

.NET 7 introduced the System.Formats.Tar namespace for working with TAR files, adding the following types to the .NET developer's toolkit:

- System.Formats.Tar.TarFile to pack a directory into a TAR file or extract a TAR file to a directory
- System.Formats.Tar.TarReader to read a TAR file
- System.Formats.Tar.TarWriter to write a TAR file
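As a rough illustration of the reader and writer types listed above, the sketch below writes a few text files into an in-memory TAR archive with TarWriter and reads them back with TarReader. The class name TarSketch and its methods are hypothetical, and the example assumes small UTF-8 text files held entirely in memory.

```csharp
using System.Collections.Generic;
using System.Formats.Tar;
using System.IO;
using System.Text;

// Hypothetical helper for illustration only; not a framework type.
public static class TarSketch
{
    // Writes each name/content pair as a regular-file entry in a PAX-format archive.
    public static byte[] WriteArchive(IDictionary<string, string> files)
    {
        using var archive = new MemoryStream();
        using (var writer = new TarWriter(archive, TarEntryFormat.Pax, leaveOpen: true))
        {
            foreach (var (name, content) in files)
            {
                var entry = new PaxTarEntry(TarEntryType.RegularFile, name)
                {
                    DataStream = new MemoryStream(Encoding.UTF8.GetBytes(content))
                };
                writer.WriteEntry(entry);
            }
        } // Disposing the writer appends the end-of-archive marker.

        return archive.ToArray();
    }

    // Reads the archive back, keeping only regular files that carry data.
    public static Dictionary<string, string> ReadArchive(byte[] tarBytes)
    {
        var result = new Dictionary<string, string>();
        using var archive = new MemoryStream(tarBytes);
        using var reader = new TarReader(archive);

        TarEntry? entry;
        while ((entry = reader.GetNextEntry()) is not null)
        {
            // Skip directories, links, and other non-file entries.
            if (entry.EntryType != TarEntryType.RegularFile || entry.DataStream is null)
            {
                continue;
            }

            // Copy the entry data before advancing to the next entry.
            using var buffer = new MemoryStream();
            entry.DataStream.CopyTo(buffer);
            result[entry.Name] = Encoding.UTF8.GetString(buffer.ToArray());
        }

        return result;
    }
}
```

When the archive and its contents live on disk, TarFile covers the same ground in a single call, for example TarFile.CreateFromDirectory("src", "archive.tar", includeBaseDirectory: false) or TarFile.ExtractToDirectory("archive.tar", "out", overwriteFiles: true), where the paths are placeholders.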

These new capabilities significantly simplify the process of working with TAR files in .NET. Let's dive in and have a look at a code sample that demonstrates how to extract a .tar.gz file natively in .NET 7.