Pimp My Terminal - Terminal Customization with Oh My Posh - A Cloud Native Terminal Setup

🎯 TL;DR: Automated Oh My Posh Terminal Setup for Cloud Native Development

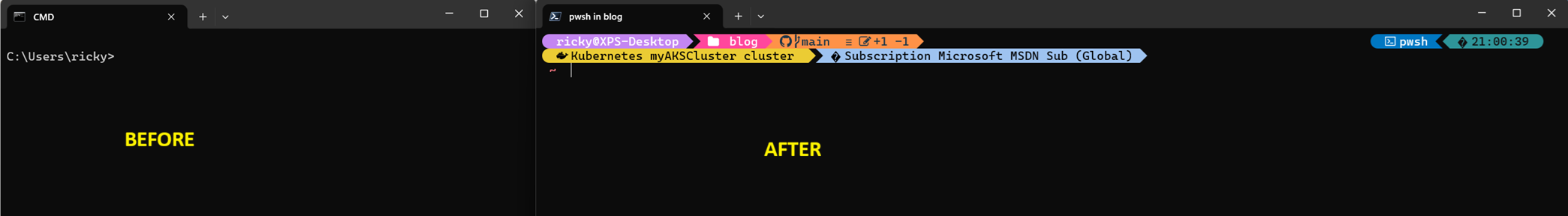

Every new machine or fresh Windows install means reconfiguring your terminal environment from scratch.

Problem: Manually setting up Oh My Posh, installing Nerd Fonts, and configuring custom themes is tedious and error-prone across multiple machines.

Solution: A single PowerShell script, available on GitHub (https://github.com/Ricky-G/script-library/blob/main/pimp-my-terminal.ps1), automates the entire process: installing Oh My Posh via winget, deploying a Nerd Font and the Terminal-Icons module, creating a custom “Cloud Native Azure” theme optimized for Kubernetes and Azure workflows, and configuring your PowerShell profile with PSReadLine enhancements.

Prerequisites: Before running the script, enable script execution with:

    Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

This approach transforms the multi-hour setup process into a one-command operation, providing immediate visual context for Git branches, Kubernetes clusters, Azure subscriptions, and command execution times - critical information for modern cloud native development.

Recently, I found myself setting up yet another development machine, and as I stared at the blank PowerShell terminal, I realized I’d reached my limit with manual terminal configuration. Every new machine or clean install meant the same tedious process: download Oh My Posh, find a Nerd Font installer, copy configuration files, edit PowerShell profiles, and spend 30 minutes getting everything just right.

The frustration wasn’t just about aesthetics - a properly configured terminal is a productivity multiplier. When you’re constantly switching between multiple Git repositories, Kubernetes clusters, and Azure subscriptions throughout the day, having that contextual information immediately visible saves countless keystrokes and eliminates mental overhead.

This blog post shares my automated solution: a single PowerShell script that takes a bare Windows terminal and transforms it into a fully-configured, cloud native-ready development environment in under 5 minutes. Whether you’re setting up a new machine, rebuilding after a Windows update disaster, or just want to standardize terminal configuration across your team, this automation eliminates the manual work.

Quick Start - Get Up and Running in 5 Minutes

Want to skip the details and just get started? Here’s everything you need to run the automation script:

Step 1: Enable Script Execution

Open PowerShell as Administrator and run:

    Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

When prompted, type Y and press Enter.
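If you want to confirm the change took effect, a quick check along these lines should work. It uses only the built-in Get-ExecutionPolicy cmdlet. Keep in mind that a policy set through Group Policy (the MachinePolicy or UserPolicy scopes) takes precedence over CurrentUser, so the effective policy can differ from the one you just set.

```powershell
# List the policy configured at every scope, highest precedence first.
Get-ExecutionPolicy -List

# Show the policy that actually applies to this session.
$effective = Get-ExecutionPolicy
"Effective execution policy: $effective"
```

If the effective policy is still Restricted or AllSigned, a higher-precedence scope is probably overriding the CurrentUser setting.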

Step 2: Download and Run the Script

    # Download and run the automation script
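One way to handle the download, sketched under assumptions rather than taken from the script's own instructions: ConvertTo-RawGitHubUrl and Save-SetupScript are hypothetical helper names used for illustration, and the raw.githubusercontent.com address is derived from the GitHub page URL given in the TL;DR. The sketch saves the script to a temp file without running it, so you can read it first.

```powershell
# Hypothetical helper (not part of the original script): converts a github.com
# "blob" page URL into the matching raw.githubusercontent.com file URL.
function ConvertTo-RawGitHubUrl {
    param([Parameter(Mandatory)][string]$BlobUrl)
    $BlobUrl -replace '^https://github\.com/([^/]+)/([^/]+)/blob/', 'https://raw.githubusercontent.com/$1/$2/'
}

# Hypothetical wrapper: downloads the script to the temp folder and returns
# the local path. It does NOT execute the script.
function Save-SetupScript {
    param(
        # Assumption: the page URL quoted earlier in this post.
        [string]$BlobUrl = 'https://github.com/Ricky-G/script-library/blob/main/pimp-my-terminal.ps1'
    )
    $target = Join-Path ([System.IO.Path]::GetTempPath()) 'pimp-my-terminal.ps1'
    # -UseBasicParsing matters only on Windows PowerShell 5.1; newer versions accept and ignore it.
    Invoke-WebRequest -Uri (ConvertTo-RawGitHubUrl -BlobUrl $BlobUrl) -OutFile $target -UseBasicParsing
    $target
}
```

With these defined, $path = Save-SetupScript downloads the file, Get-Content $path lets you review it, and & $path runs it.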

The script will automatically install and configure:

- ✅ Oh My Posh via winget

- ✅ MesloLGM Nerd Font

- ✅ Terminal-Icons PowerShell module

- ✅ Cloud Native Azure theme

- ✅ PSReadLine enhancements

- ✅ Custom keyboard shortcuts
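Once the script finishes, a small check like the following can confirm that the main pieces landed. Test-TerminalSetup is a hypothetical helper name used for illustration, not something the script provides. It uses only built-in cmdlets and assumes the script wires Oh My Posh into the profile that $PROFILE points to. The oh-my-posh command may not be found until you open a new terminal session after the winget install.

```powershell
# Hypothetical helper: reports which parts of the setup are visible
# from the current PowerShell session.
function Test-TerminalSetup {
    $profilePath   = $PROFILE
    $profileExists = [bool]($profilePath -and (Test-Path -Path $profilePath))

    [pscustomobject]@{
        # Is the oh-my-posh executable reachable via PATH?
        OhMyPoshOnPath      = [bool](Get-Command oh-my-posh -ErrorAction SilentlyContinue)
        # Is the Terminal-Icons module installed where PowerShell can find it?
        TerminalIconsModule = [bool](Get-Module -ListAvailable -Name Terminal-Icons)
        # Does a profile file exist, and does it mention oh-my-posh?
        ProfileExists       = $profileExists
        ProfileUsesOhMyPosh = $profileExists -and
            [bool](Select-String -Path $profilePath -Pattern 'oh-my-posh' -SimpleMatch -Quiet)
    }
}

Test-TerminalSetup | Format-List
```

Any value that comes back False points at the step worth re-running or checking by hand.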

Step 3: Configure Your Terminal Font

After the script completes, configure your terminal font:

Windows Terminal:

- Open Settings (Ctrl + ,)

- Go to Profiles → Defaults → Appearance

- Set Font face to: MesloLGM Nerd Font

- Save and restart the terminal

VS Code:

- Open Settings (Ctrl + ,)

- Search for “terminal font”

- Set Terminal › Integrated: Font Family to: MesloLGM Nerd Font

Done! Open a new terminal and enjoy your beautiful, cloud native-ready prompt.

Understanding Oh My Posh: The Modern Prompt Engine

Before diving into the automation, it’s worth understanding what Oh My Posh brings to the table and why it’s become the de facto standard for PowerShell prompt customization.