Application Gateway Ingress Controller For AKS

Recently I ran into an interesting issue with an AKS cluster running 2000+ services. There is nothing wrong with running 2000+ services; that’s what Kubernetes is there for: scale! What caught my attention was trying to get the Application Gateway Ingress Controller (AGIC) to provide ingress to all of these services. I had used Istio and NGINX for ingress into AKS with no issues, but never AGIC, so I had to try it to see where it works well, what the advantages are, and where the limitations are.

Application Gateway

Application Gateway (App Gateway) is a well-established layer 7 load balancer that has been around for a while. Some of its major features are:

- URL routing

- Cookie-based affinity

- SSL termination

- End-to-end SSL

- Support for public, private, and hybrid web sites

- Integrated web application firewall

- Zone redundancy

- Connection draining

This post isn’t focused on the App Gateway itself; it’s about what it can do as an ingress controller for AKS. You can find out more about App Gateway and all of its features in the official Application Gateway documentation.

TL;DR

Benefits of AGIC

- Direct connection to the pods without an extra hop, which can lower network latency by up to 50% compared to in-cluster ingress

- This could make a huge difference for performance- and latency-sensitive applications and workloads

- If you go the AKS add-on route, AGIC is fully managed and updated by Azure

- In-cluster ingress consumes and competes for AKS compute/memory resources, whereas App Gateway sits outside the cluster and won’t consume any AKS compute

- Full benefits of the Application Gateway, such as WAF, cookie-based affinity and SSL termination, among many others

Limitations

- Application Gateway has backend limits: a single gateway is limited to 100 backend pools

- Application Gateway is billed separately, so it has a pricing implication

- Routing goes directly to pod IPs rather than the ClusterIP of the service. There is a feature request open for this

Application Gateway Ingress Controller (AGIC)

AGIC reached GA around the end of 2019 and made it possible to hook up an App Gateway as an attractive alternative for ingress into an AKS cluster. Before going any further with AGIC, we need to understand at a high level how networking works in AKS.

There are two main network models:

Kubenet networking

- Default option for Kubernetes out of the box

- Each Node receives an IP from the Azure virtual network subnet

- Pods in the node are not associated to the Azure vnet, they are assigned an IP address from the PodIPCidr and a route table is created by AKS

Azure Container Networking Interface networking (CNI)

- Each pod itself receives an IP address from the Azure virtual network subnet

- Pods can be directly reached via their private IP from connected networks

- Pods can access resources in the vnet directly without issues (e.g. a function app in the same vnet)

It’s important to note that once you create an AKS cluster with a given network model you can’t change it; you have to create a new cluster. Both models have advantages and disadvantages, which are covered in detail in the AKS networking documentation.

One key consideration to highlight is:

- Kubenet - a /24 IP range can support up to 251 nodes (Azure reserves five addresses in every subnet, so 256 - 5 = 251 are usable). Given the maximum number of pods per node in Kubenet is 110, this configuration can support a maximum of 251 * 110 = 27,610 pods

- CNI - the same /24 IP range can support a maximum of 8 nodes, because every node needs an IP for itself plus one for each pod it can host (CNI defaults to a maximum of 30 pods per node). So, this configuration can support a maximum of 8 * 30 = 240 pods
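The arithmetic behind those two figures can be sketched in a few lines of shell. This is a rough back-of-the-envelope helper, not an official sizing tool: the function names are made up for this post, it assumes Azure's five reserved addresses per subnet, and it ignores the extra headroom you would normally leave for node upgrades.

```shell
#!/bin/sh
# Sketch: rough subnet capacity for the two AKS network models.
# Assumption: Azure reserves 5 addresses in every subnet.

# Usable addresses in a subnet with the given prefix length (e.g. 24).
usable_ips() {
  echo $(( (1 << (32 - $1)) - 5 ))
}

# Kubenet: only nodes take subnet IPs, so pods = nodes * pods-per-node.
kubenet_max_pods() {
  echo $(( $(usable_ips "$1") * $2 ))
}

# Azure CNI: each node takes 1 IP for itself plus 1 per pod it may host.
cni_max_nodes() {
  echo $(( $(usable_ips "$1") / ($2 + 1) ))
}

cni_max_pods() {
  echo $(( $(cni_max_nodes "$1" "$2") * $2 ))
}

echo "kubenet /24: $(usable_ips 24) nodes, $(kubenet_max_pods 24 110) pods"
echo "CNI     /24: $(cni_max_nodes 24 30) nodes, $(cni_max_pods 24 30) pods"
```

For a /24 this prints 251 nodes and 27610 pods for Kubenet, and 8 nodes and 240 pods for CNI, matching the numbers above.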

When it comes to CNI you have to plan your IP address space up front; you might need a /16 range to get a bigger node count. Kubenet has limitations of its own that also need to be taken into consideration.

With the AKS networking models out of the way, let’s look at AGIC. Regardless of which model is chosen, the goal of AGIC is to ingress directly to the pod. When deployed, AGIC runs in a pod in the AKS cluster and watches for changes. When a change is detected (e.g. a new pod has been added or an existing pod removed), the IP changes are propagated to the App Gateway via Azure Resource Manager.

If we go with the CNI networking model, each pod gets an IP address from the vnet and the App Gateway maps to those addresses directly. With the Kubenet model, pod IPs are not part of the vnet, so AGIC will try to assign the route table created by AKS to the App Gateway’s subnet so that the gateway can still reach the pods.

It’s important to note that whichever model you choose, the App Gateway will always connect directly to the pod; this is by design.

Deploying AGIC

AGIC can be deployed in two ways: with Helm or as an AKS add-on. Each has its pros and cons. The key benefit of the AKS add-on is that it is fully managed and automatically updated by Azure (i.e. all updates, patching etc. for AGIC are taken care of for you), whereas with Helm you have to do that yourself.

Let’s go ahead and deploy a demo AKS cluster with AGIC and see it in action to understand exactly what is going on. For the sake of simplicity, this demo creates an AKS cluster with the CNI networking model and deploys AGIC as an AKS add-on.

Create an AKS cluster

Login and set the right subscription

    az login

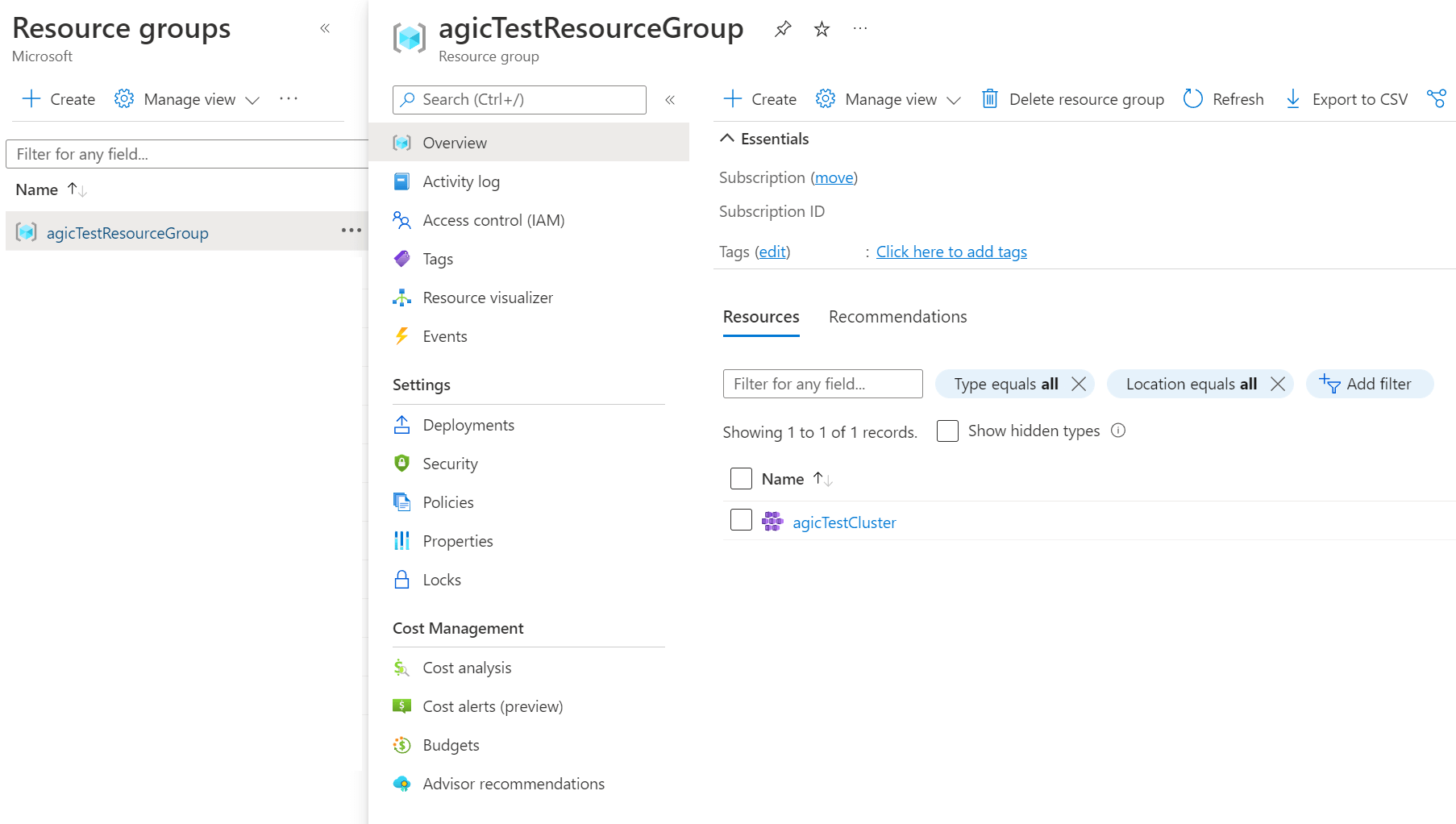

Create a new resource group

    az group create --name agicTestResourceGroup --location eastus

Here we are creating a new AKS cluster with the CNI networking model (--network-plugin azure) and setting up App Gateway as ingress. In this instance we are saying our App Gateway’s name is “testAppGateway”, which doesn’t exist yet and will be created for us.

Create AKS cluster

    az aks create -n agicTestCluster -g agicTestResourceGroup --network-plugin azure --enable-managed-identity -a ingress-appgw --appgw-name testAppGateway --appgw-subnet-cidr "10.225.0.0/16" --generate-ssh-keys

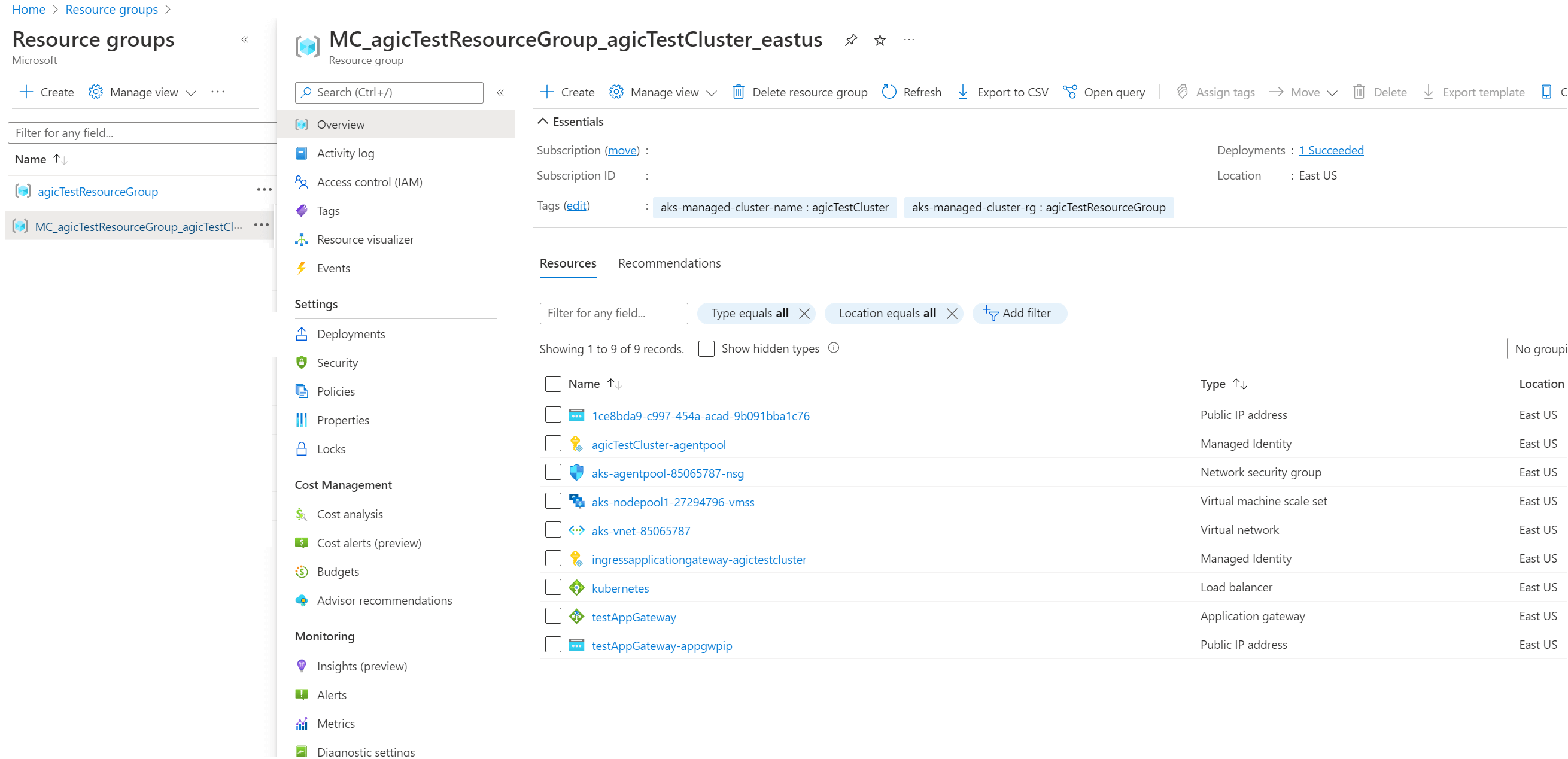

If we go into the Azure Portal, we can see two resource groups. One is the group we created, which holds the AKS cluster resource (the Azure-managed control plane). The other (MC_agicTestResourceGroup_agicTestCluster_eastus) is where the node pool, vnet, App Gateway etc. all live; it is created automatically for us as part of the az aks create command.
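If you prefer the command line to the Portal, the second resource group's name follows the default MC_&lt;resource group&gt;_&lt;cluster&gt;_&lt;location&gt; pattern. The helper below is a small sketch of that convention (the function name is made up for this post); the commented az commands show how you could confirm the name against the live cluster, which is the safer route because the name can be overridden when a cluster is created.

```shell
#!/bin/sh
# Sketch: build the default node resource group name for an AKS cluster.
# Arguments: <resource group> <cluster name> <location>
node_rg_name() {
  printf 'MC_%s_%s_%s\n' "$1" "$2" "$3"
}

# Names used in this post's demo cluster.
node_rg_name agicTestResourceGroup agicTestCluster eastus

# Against the live cluster (requires az login), ask Azure instead of guessing:
#   az aks show -n agicTestCluster -g agicTestResourceGroup --query nodeResourceGroup -o tsv
#   az resource list -g MC_agicTestResourceGroup_agicTestCluster_eastus -o table
```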

Deploy a sample API

Now that we have the AKS cluster up and running with AGIC deployed as an add-on, let’s deploy a sample API app and set up ingress through the App Gateway.

Get credentials to the AKS cluster

    az aks get-credentials -n agicTestCluster -g agicTestResourceGroup

Deploy a sample API

    kubectl apply -f https://gist.githubusercontent.com/Ricky-G/59eb109913bd45d3e9229f9cf0a97edc/raw/b336047feecd9fd89fbe1a9627ac385b525124fe/sample-api-aks-deployment.yaml

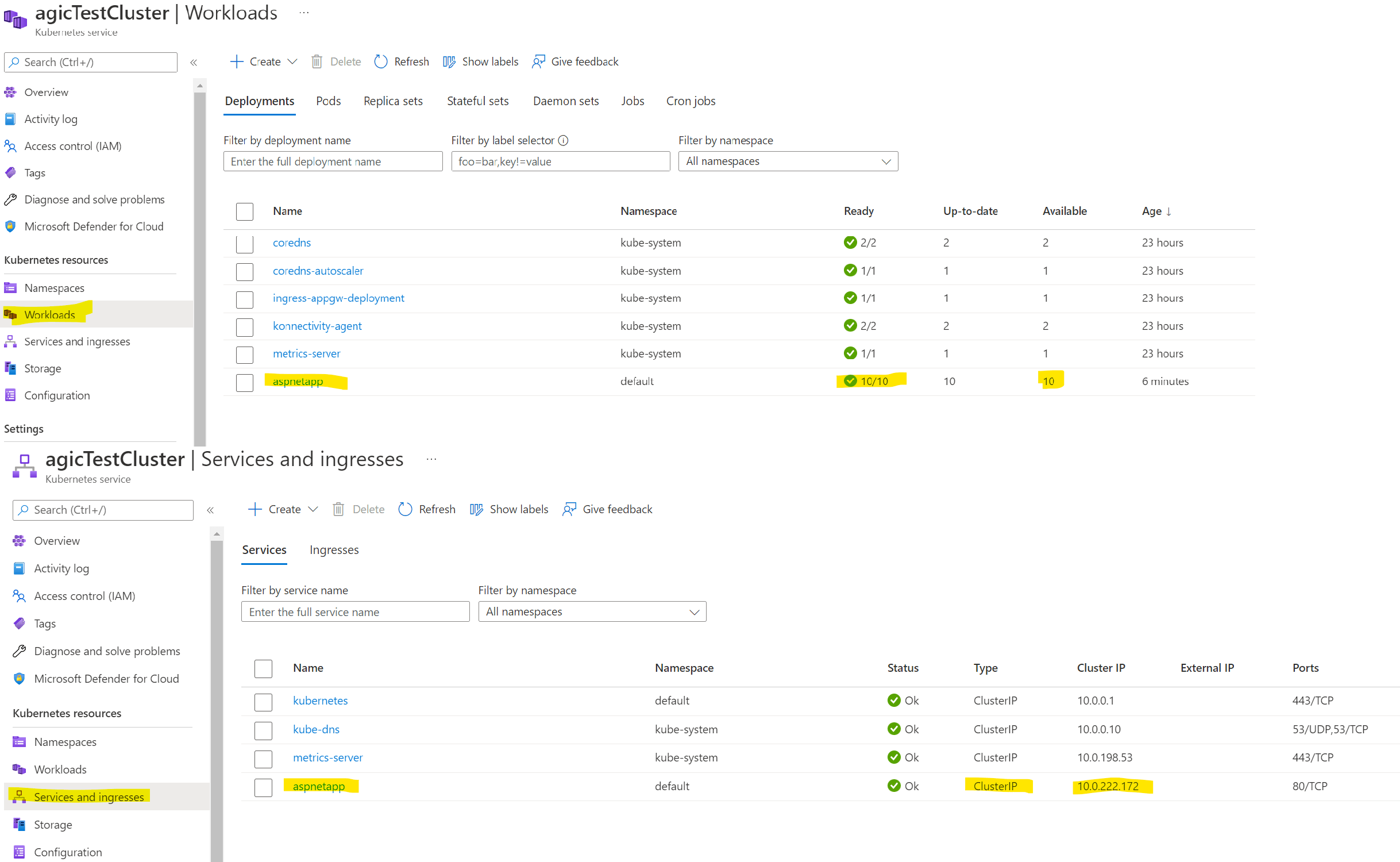

The above sample API deployment YAML was taken from the AGIC GitHub repo; the only change is that it asks for 10 replicas, i.e. we need 10 pods running this API. As soon as you apply it you should see the app deployed as a service with 10 pods running successfully, and a cluster IP set for the service (the cluster IP is a virtual IP that Kubernetes creates for the service; in-cluster traffic sent to this IP is load balanced across the 10 pods).

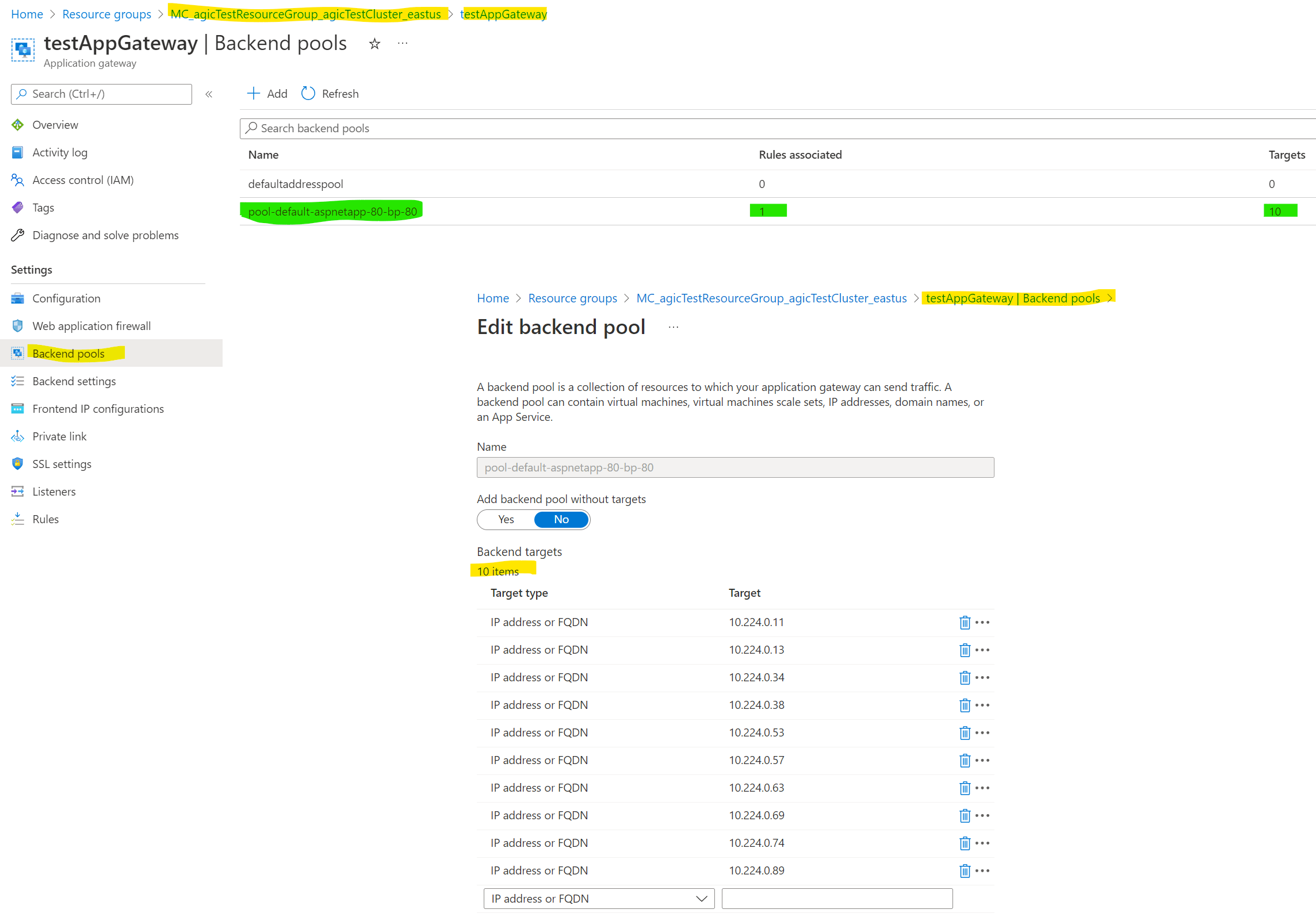

Now if we go to the resource group that holds the actual Application Gateway and open its backend pools, we can see one that was created by AGIC. If we dig into that pool, the IP addresses of all 10 pods are listed there. So, we have direct ingress to the pods from the Application Gateway.
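The same check can be done from a terminal by comparing the pod IPs Kubernetes reports with the addresses in the gateway's backend pools. The sketch below keeps the comparison in a small pure-shell helper (a made-up name) and runs it on sample data; the commented lines show how the two lists could be fetched live. The app=aspnetapp label selector is an assumption about the sample manifest, so adjust it to whatever labels your pods carry.

```shell
#!/bin/sh
# Sketch: print every pod IP that is not (yet) present in the backend pool.
# Arguments: two whitespace-separated lists of IPs: <pod IPs> <pool IPs>
missing_from_pool() {
  pods=$1
  pool=$(echo $2)   # unquoted on purpose: collapses newlines/tabs into spaces
  for ip in $pods; do
    case " $pool " in
      *" $ip "*) ;;                  # pod IP is already in the backend pool
      *) printf '%s\n' "$ip" ;;      # pod IP has not been propagated
    esac
  done
}

# Sample data only: the second pod is missing from the pool.
missing_from_pool "10.0.0.4 10.0.0.5 10.0.0.6" "10.0.0.4
10.0.0.6"

# Live usage (requires kubectl and az; label selector is an assumption):
#   pods=$(kubectl get pods -l app=aspnetapp -o jsonpath='{.items[*].status.podIP}')
#   pool=$(az network application-gateway address-pool list \
#            -g MC_agicTestResourceGroup_agicTestCluster_eastus \
#            --gateway-name testAppGateway \
#            --query "[].backendAddresses[].ipAddress" -o tsv)
#   missing_from_pool "$pods" "$pool"
```

With the sample data this prints 10.0.0.5; against a healthy cluster the live version should print nothing once AGIC has caught up.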

Finally, if we run the command below, we should see an ingress IP address for “aspnetapp”, which is our sample API. This is the public IP of the Application Gateway, which has been wired up to ingress all the way to the pod. If we paste this IP into the browser, we can see the sample ASP.NET site served from the pod.

    kubectl get ingress

Right, so we have successfully ingressed from a public IP, via the Application Gateway, all the way to our pod.
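What ties a Kubernetes Ingress to the App Gateway is the ingress class. The sketch below is not the exact content of the gist used above; it is a minimal Ingress in the style of the AGIC sample, wrapped in a hypothetical shell function so the service name and port can be swapped. It assumes a Service called aspnetapp listening on port 80 and uses the kubernetes.io/ingress.class annotation that AGIC watches for.

```shell
#!/bin/sh
# Sketch: print a minimal Ingress manifest that AGIC would pick up.
# Arguments: <service name> <service port>
render_ingress() {
  name=$1
  port=$2
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: $name
  annotations:
    # Tells AGIC (rather than an in-cluster controller) to handle this Ingress
    kubernetes.io/ingress.class: azure/application-gateway
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: $name
            port:
              number: $port
EOF
}

# Print the manifest for the sample API (assumed service name and port).
render_ingress aspnetapp 80
```

To apply it rather than just print it, pipe the output into kubectl: render_ingress aspnetapp 80 | kubectl apply -f -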

Benefits of AGIC

- Direct connection to the pods without an extra hop, which can lower network latency by up to 50% compared to in-cluster ingress

- This could make a huge difference for performance- and latency-sensitive applications and workloads

- If you go the AKS add-on route, AGIC is fully managed and updated by Azure

- In-cluster ingress consumes and competes for AKS compute/memory resources, whereas App Gateway sits outside the cluster and won’t consume any AKS compute

- Full benefits of the Application Gateway, such as WAF, cookie-based affinity and SSL termination, among many others

Limitations

- Application Gateway has backend limits: a single gateway is limited to 100 backend pools

- Application Gateway is billed separately, so it has a pricing implication

- Routing goes directly to pod IPs rather than the ClusterIP of the service. There is a feature request open for this

Closing Thoughts

The key thing to keep in mind is the backend pool limit of 100. If you have more than 100 services that need ingress, you would need multiple Application Gateways to cater for them. Although running multiple App Gateways for one AKS cluster is a supported scenario and straightforward to set up, your costs will pile up.

At the start of this post, I mentioned a scenario of 2000+ services; in this case we would need at least 20 App Gateways (2000 services / 100 backend pools per gateway = 20). Due to the cost implications this won’t be palatable in most cases.
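That estimate is a ceiling division, which matters as soon as the service count is not an exact multiple of 100. A minimal sketch, assuming (as the calculation above does) that each service takes up one backend pool; the function name is made up for this post:

```shell
#!/bin/sh
# Sketch: how many App Gateways are needed for a given number of services.
# Arguments: <number of services> <backend pools per gateway>
gateways_needed() {
  echo $(( ($1 + $2 - 1) / $2 ))   # integer ceiling of services / limit
}

gateways_needed 2000 100   # prints 20
gateways_needed 2001 100   # prints 21
```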

On the plus side, you get a direct connection to the pod and can shave up to 50% off network latency. So, in this scenario of 2000+ services in one cluster, we could put the App Gateway in front of just the latency-sensitive apps/APIs and use a traditional in-cluster ingress for all the other services. This way you get the best of both worlds while staying below the App Gateway backend pool limit.

One neat option for an in-cluster ingress could be Web Application Routing, which is still in preview at the time of writing. It’s a managed NGINX-based solution that should work well as an in-cluster ingress controller.

References

- AGIC main documentation

- AGIC GitHub

- Main image was taken from the Azure site and slightly modified
